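极智项目 | YOLO11 Object Detection: Training + TensorRT Deployment in Practice

- Project download: https://download.csdn.net/download/weixin_42405819/89930107

- Project author: 极智视界

- Project init date: 20241001

- Project description: trains YOLO11 on the coco_minitrain_10k dataset and accelerates inference with the TensorRT Python API; includes the ONNX export script and the ONNX-to-TensorRT conversion script

- Project reference: the YOLO11 part is based on https://github.com/ultralytics/ultralytics (the official source of the YOLO11 algorithm)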

2. Algorithm Training

(1) Dataset preparation

Place the dataset in datasets/coco_minitrain_10k. The directory structure is as follows:

    datasets/
    └── coco_minitrain_10k/
        ├── annotations/
        │   ├── instances_train2017.json
        │   ├── instances_val2017.json
        │   └── ... (other annotation files)
        ├── train2017/
        │   ├── 000000000001.jpg
        │   └── ... (other training images)
        ├── val2017/
        │   ├── 000000000001.jpg
        │   └── ... (other validation images)
        └── test2017/
            ├── 000000000001.jpg
            └── ... (other test images)
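Before training, it can help to confirm that the dataset directory matches the layout above. The following is a minimal sketch, not part of the project: the function name `find_missing_entries` and the constant `EXPECTED_ENTRIES` are hypothetical, and the checked sub-paths are simply the ones listed in the tree.

```python
from pathlib import Path

# Sub-paths taken from the directory tree above.
# The names below are illustrative and do not come from the project.
EXPECTED_ENTRIES = (
    "annotations/instances_train2017.json",
    "annotations/instances_val2017.json",
    "train2017",
    "val2017",
    "test2017",
)


def find_missing_entries(dataset_root):
    """Return the expected sub-paths that do not exist under dataset_root."""
    root = Path(dataset_root)
    return [entry for entry in EXPECTED_ENTRIES if not (root / entry).exists()]


if __name__ == "__main__":
    missing = find_missing_entries("datasets/coco_minitrain_10k")
    if missing:
        print("Missing entries:", ", ".join(missing))
    else:
        print("Dataset layout looks complete.")
```

Run from the project root, this prints any missing files or folders, so a misplaced dataset shows up before a training run starts.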

(2) Training environment setup

    conda create -n yolo11_py310 python=3.10
    conda activate yolo11_py310
    pip install -U -r train/requirements.txt

(3) 推理测试

先下载预训练权重:

bash 0_download_wgts.sh执行预测测试:

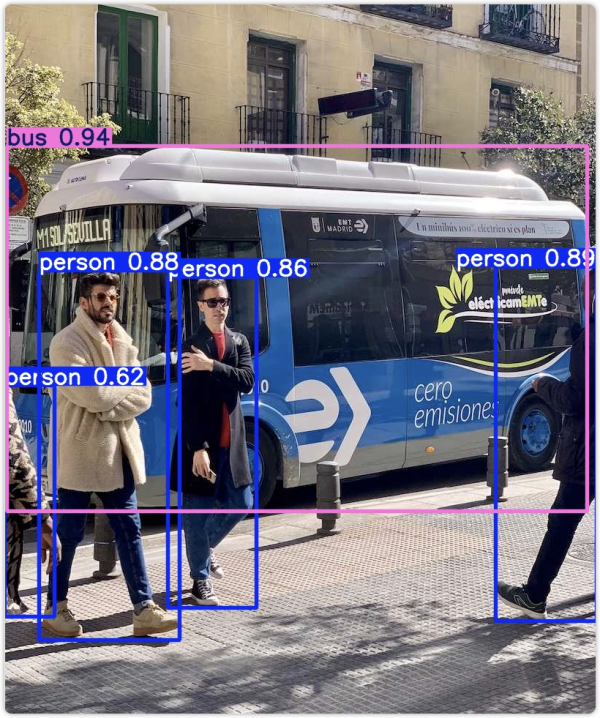

bash 1_run_predict_yolo11.sh预测结果保存在 runs 文件夹下,效果如下:

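(4) Start training

A one-step training script is already provided, so just run it:

    bash 2_run_train_yolo11.sh

The code that does the actual work is short and lives in train/train_yolo11.py:

    from ultralytics import YOLO

    # curr_path is defined earlier in train/train_yolo11.py (not shown in this excerpt)

    # Load a model
    model = YOLO(curr_path + "/wgts/yolo11n.pt")

    # Train the model
    train_results = model.train(
        data=curr_path + "/cfg/coco128.yaml",  # path to dataset YAML
        epochs=100,  # number of training epochs
        imgsz=640,  # training image size
        device="0",  # device to run on, i.e. device=0 or device=0,1,2,3 or device=cpu
    )

    # Evaluate model performance on the validation set
    metrics = model.val()

It mainly sets the training parameters, such as the dataset path, number of epochs, GPU ID, and image size, and then starts training.

After training finishes, the training logs are saved under the runs/train folder, including, for example, the val prediction images produced during training.

That completes the algorithm training.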

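3. Algorithm Deployment

The algorithm is deployed with TensorRT.

(1) Export ONNX

Run the one-step ONNX export script:

    bash 3_run_export_onnx.sh

The script already applies sim simplification to the ONNX model.

The exported ONNX model and the simplified _sim ONNX model are both saved in the wgts folder.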
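The contents of 3_run_export_onnx.sh are not shown here, so the following is only a sketch of how such an export could look. It assumes the export goes through the Ultralytics `model.export(format="onnx")` call and that the simplification step uses the `onnxsim` package; the helper names `sim_path_for` and `export_and_simplify` are hypothetical. The `_sim` suffix follows the file name `yolo11n_sim.onnx` used later when building the engine.

```python
from pathlib import Path


def sim_path_for(onnx_path):
    """Derive the simplified-model path, e.g. yolo11n.onnx -> yolo11n_sim.onnx."""
    p = Path(onnx_path)
    return p.with_name(p.stem + "_sim" + p.suffix)


def export_and_simplify(weights_path):
    """Export weights to ONNX, then write a simplified copy next to it.

    Assumption: the project may do this differently; this is one common way.
    """
    # Imported lazily so the path helper works without these packages installed.
    import onnx
    from onnxsim import simplify
    from ultralytics import YOLO

    model = YOLO(weights_path)
    onnx_path = model.export(format="onnx")  # returns the exported file path

    simplified, ok = simplify(onnx.load(onnx_path))
    if not ok:
        raise RuntimeError("onnxsim could not validate the simplified model")

    out_path = sim_path_for(onnx_path)
    onnx.save(simplified, str(out_path))
    return out_path


if __name__ == "__main__":
    print(sim_path_for("wgts/yolo11n.onnx").as_posix())
```

Keeping both the raw and the simplified model, as the project does, makes it easy to compare the two graphs if the TensorRT build later fails on one of them.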

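(2) Install the TensorRT environment

Download the TensorRT TAR package for the version that matches your environment from the NVIDIA website (https://developer.nvidia.com/tensorrt/download). The basic steps after downloading are:

    tar zxvf TensorRT-xxx-.tar.gz
    # symlink trtexec
    sudo ln -s /path/to/TensorRT/bin/trtexec /usr/local/bin
    # verify the installation
    trtexec --help
    # install the TensorRT Python interface
    cd python
    pip install tensorrt-xxx.whl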
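After these steps, a quick check from Python can confirm that both pieces are in place: the `trtexec` binary on PATH and the `tensorrt` package in the active environment. This is a small sketch using only the standard library; the function name `check_tensorrt_setup` is hypothetical.

```python
import importlib.util
import shutil


def check_tensorrt_setup():
    """Report whether trtexec is on PATH and the tensorrt package is importable."""
    return {
        "trtexec_on_path": shutil.which("trtexec") is not None,
        "tensorrt_importable": importlib.util.find_spec("tensorrt") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_tensorrt_setup().items():
        print(f"{name}: {'OK' if ok else 'MISSING'}")
```

If `tensorrt_importable` is reported as missing, the wheel was probably installed into a different environment than the one currently activated.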

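(3) Build the TensorRT engine file

Run the one-step engine build script:

    bash 4_build_trt_engine.sh

On success, yolo11n.engine is generated under the wgts path, with log output similar to the following: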

    [10/02/2024-21:28:48] [V] === Explanations of the performance metrics ===
    [10/02/2024-21:28:48] [V] Total Host Walltime: the host walltime from when the first query (after warmups) is enqueued to when the last query is completed.
    [10/02/2024-21:28:48] [V] GPU Compute Time: the GPU latency to execute the kernels for a query.
    [10/02/2024-21:28:48] [V] Total GPU Compute Time: the summation of the GPU Compute Time of all the queries. If this is significantly shorter than Total Host Walltime, the GPU may be under-utilized because of host-side overheads or data transfers.
    [10/02/2024-21:28:48] [V] Throughput: the observed throughput computed by dividing the number of queries by the Total Host Walltime. If this is significantly lower than the reciprocal of GPU Compute Time, the GPU may be under-utilized because of host-side overheads or data transfers.
    [10/02/2024-21:28:48] [V] Enqueue Time: the host latency to enqueue a query. If this is longer than GPU Compute Time, the GPU may be under-utilized.
    [10/02/2024-21:28:48] [V] H2D Latency: the latency for host-to-device data transfers for input tensors of a single query.
    [10/02/2024-21:28:48] [V] D2H Latency: the latency for device-to-host data transfers for output tensors of a single query.
    [10/02/2024-21:28:48] [V] Latency: the summation of H2D Latency, GPU Compute Time, and D2H Latency. This is the latency to infer a single query.
    [10/02/2024-21:28:48] [I]
    &&&& PASSED TensorRT.trtexec [TensorRT v100500] [b18] # trtexec --onnx=../wgts/yolo11n_sim.onnx --saveEngine=../wgts/yolo11n.engine --fp16 --verbose
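The last log line shows the trtexec command the build ran. The same command can be assembled and launched from Python, which is convenient when building engines for several models. This is a sketch only: the function names `build_trtexec_cmd` and `build_engine` are hypothetical, and the flags are exactly the ones that appear in the log.

```python
import subprocess


def build_trtexec_cmd(onnx_path, engine_path, fp16=True, verbose=True):
    """Assemble the trtexec argument list using the flags seen in the log."""
    cmd = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")
    if verbose:
        cmd.append("--verbose")
    return cmd


def build_engine(onnx_path, engine_path):
    """Run trtexec; raises CalledProcessError if the build exits with an error."""
    subprocess.run(build_trtexec_cmd(onnx_path, engine_path), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so this works without TensorRT.
    print(" ".join(build_trtexec_cmd("../wgts/yolo11n_sim.onnx", "../wgts/yolo11n.engine")))
```

Passing the arguments as a list rather than a single shell string avoids quoting problems when a path contains spaces.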

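(4) Run TensorRT inference

Run the one-step inference script:

    bash 5_infer_trt.sh

The actual TensorRT inference script is deploy/infer_trt.py. On success it prints the following log:

    ------ trt infer success! ------

The inference result is saved to deploy/output.jpg.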
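deploy/infer_trt.py is not reproduced here. As a rough idea of the first step such a script has to perform, the sketch below loads a serialized engine with the TensorRT Python API. The function name `load_engine` is hypothetical, and the project's own script may be organized differently; preprocessing, buffer allocation, and post-processing are left out.

```python
from pathlib import Path


def load_engine(engine_path):
    """Deserialize a TensorRT engine file and return the engine object."""
    path = Path(engine_path)
    if not path.is_file():
        raise FileNotFoundError(f"engine file not found: {path}")

    # Imported lazily so the path check above works without TensorRT installed.
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    runtime = trt.Runtime(logger)
    engine = runtime.deserialize_cuda_engine(path.read_bytes())
    if engine is None:
        raise RuntimeError(f"failed to deserialize engine: {path}")
    return engine


if __name__ == "__main__":
    try:
        load_engine("wgts/yolo11n.engine")
        print("engine loaded")
    except (FileNotFoundError, ImportError, RuntimeError) as exc:
        print("could not load engine:", exc)
```

A deserialization failure often means the engine was built with a different TensorRT version or on a different GPU than the one used for inference, in which case rebuilding the engine on the target machine is the usual fix.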

Source: 极智视界
