Jizhi Project | YOLO11 Object Detection: Training + TensorRT Deployment in Practice

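- Project download: https://download.csdn.net/download/weixin_42405819/89930107

- Project author: 极智视界

- Project init date: 2024-10-01

- Project description: trains YOLO11 on the coco_minitrain_10k dataset and accelerates inference with the TensorRT Python API; includes the ONNX export script and the ONNX-to-TensorRT conversion script

- Project reference: the YOLO11 part is based on https://github.com/ultralytics/ultralytics (the official source of the YOLO11 algorithm)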

2. Algorithm training

(1) Dataset preparation

Place the dataset in datasets/coco_minitrain_10k. The directory structure is as follows:

    datasets/
    └── coco_minitrain_10k/
        ├── annotations/
        │   ├── instances_train2017.json
        │   ├── instances_val2017.json
        │   ├── ... (other annotation files)
        ├── train2017/
        │   ├── 000000000001.jpg
        │   ├── ... (other training images)
        ├── val2017/
        │   ├── 000000000001.jpg
        │   ├── ... (other validation images)
        └── test2017/
            ├── 000000000001.jpg
            ├── ... (other test images)
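Before training, it can help to confirm that the dataset folder matches the layout above. The following is a minimal sketch, not part of the project: the helper names `missing_entries` and `count_images` are hypothetical, and the expected entries are simply copied from the directory tree.

```python
from pathlib import Path

# Entries copied from the directory tree above; adjust if your copy differs.
EXPECTED_ENTRIES = [
    "annotations/instances_train2017.json",
    "annotations/instances_val2017.json",
    "train2017",
    "val2017",
    "test2017",
]


def missing_entries(root):
    """Return the expected files/folders that are absent under `root`."""
    root = Path(root)
    return [entry for entry in EXPECTED_ENTRIES if not (root / entry).exists()]


def count_images(root, split):
    """Count .jpg files in one split folder (0 if the folder is missing)."""
    folder = Path(root) / split
    return len(list(folder.glob("*.jpg"))) if folder.is_dir() else 0
```

For example, `missing_entries("datasets/coco_minitrain_10k")` returns an empty list when every expected entry is present.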

(2) Training environment setup

    conda create -n yolo11_py310 python=3.10
    conda activate yolo11_py310
    pip install -U -r train/requirements.txt

(3) 推理测试

先下载预训练权重:

bash 0_download_wgts.sh执行预测测试:

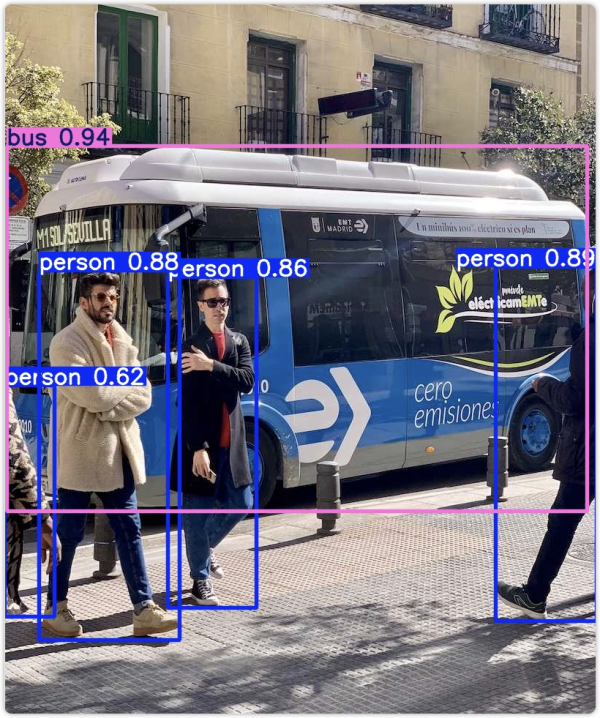

bash 1_run_predict_yolo11.sh预测结果保存在 runs 文件夹下,效果如下:

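(4) Start training

A one-click training script is already provided; just run it:

    bash 2_run_train_yolo11.sh

The code that does the actual work is simple and lives in train/train_yolo11.py:

    from ultralytics import YOLO

    # curr_path is defined earlier in train_yolo11.py (not shown here)

    # Load a model
    model = YOLO(curr_path + "/wgts/yolo11n.pt")

    # Train the model
    train_results = model.train(
        data=curr_path + "/cfg/coco128.yaml",  # path to dataset YAML
        epochs=100,  # number of training epochs
        imgsz=640,  # training image size
        device="0",  # device to run on, i.e. device=0 or device=0,1,2,3 or device=cpu
    )

    # Evaluate model performance on the validation set
    metrics = model.val()

It mainly sets the training parameters (dataset path, number of epochs, GPU ID, image size, and so on) and then runs training.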
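If you would rather change these parameters without editing the file, one option is to expose them as command-line flags. This is only a sketch under assumptions: the flag names and the `parse_args` / `train` helpers are hypothetical and not part of the project, and the default paths are relative stand-ins for the `curr_path`-based paths in the original script.

```python
import argparse


def parse_args(argv=None):
    """Expose the training options mentioned above as command-line flags."""
    parser = argparse.ArgumentParser(description="YOLO11 training sketch")
    parser.add_argument("--weights", default="wgts/yolo11n.pt", help="pretrained weights")
    parser.add_argument("--data", default="cfg/coco128.yaml", help="dataset YAML")
    parser.add_argument("--epochs", type=int, default=100, help="training epochs")
    parser.add_argument("--imgsz", type=int, default=640, help="training image size")
    parser.add_argument("--device", default="0", help='e.g. "0", "0,1,2,3" or "cpu"')
    return parser.parse_args(argv)


def train(args):
    """Run training, then validation, with the parsed options."""
    # Imported lazily so that argument parsing works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(args.weights)
    model.train(data=args.data, epochs=args.epochs, imgsz=args.imgsz, device=args.device)
    return model.val()
```

A call such as `train(parse_args(["--epochs", "50", "--device", "cpu"]))` would then run a shorter CPU-only training.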

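After training finishes, the training logs are in the runs/train folder, including, for example, the val prediction images generated during training.

This completes the algorithm training.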

3. Algorithm deployment

TensorRT is used to deploy the algorithm.

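(1) Export to ONNX

Run the one-click ONNX export script:

    bash 3_run_export_onnx.sh

The script already applies sim simplification to the ONNX model.

The generated ONNX model and the simplified _sim ONNX model are saved in the wgts folder.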
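The contents of 3_run_export_onnx.sh are not shown here, so the following is only a sketch of what such an export step could look like. It assumes that "sim" refers to the onnxsim package and that the export goes through the Ultralytics `export` call; the helper names `sim_path` and `export_and_simplify` are hypothetical.

```python
from pathlib import Path


def sim_path(onnx_path):
    """Map e.g. wgts/yolo11n.onnx -> wgts/yolo11n_sim.onnx."""
    p = Path(onnx_path)
    return p.with_name(p.stem + "_sim" + p.suffix)


def export_and_simplify(weights, imgsz=640):
    """Export weights to ONNX, then write a simplified copy next to it."""
    # Lazy imports: these need the ultralytics, onnx and onnxsim packages.
    import onnx
    from onnxsim import simplify
    from ultralytics import YOLO

    # Assumption: export() returns the path of the exported ONNX file.
    onnx_path = YOLO(weights).export(format="onnx", imgsz=imgsz)
    simplified, ok = simplify(onnx.load(onnx_path))
    if not ok:
        raise RuntimeError("onnxsim could not validate the simplified model")
    out = sim_path(onnx_path)
    onnx.save(simplified, str(out))
    return out
```

Calling `export_and_simplify("wgts/yolo11n.pt")` would be expected to leave both yolo11n.onnx and yolo11n_sim.onnx in the wgts folder, matching the file names used later in the engine build.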

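(2) Install the TensorRT environment

Download the TensorRT TAR package for your version from the NVIDIA website (https://developer.nvidia.com/tensorrt/download). The basic extract-and-install steps are:

    tar zxvf TensorRT-xxx-.tar.gz
    # symlink trtexec
    sudo ln -s /path/to/TensorRT/bin/trtexec /usr/local/bin
    # verify it works
    trtexec --help
    # install the TensorRT Python interface
    cd python
    pip install tensorrt-xxx.whl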
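After these steps, a quick check from Python can confirm that both the `tensorrt` package and the `trtexec` binary are reachable. This is a minimal sketch; the helper names `tensorrt_version` and `trtexec_location` are hypothetical.

```python
import importlib
import shutil


def tensorrt_version():
    """Return the installed tensorrt version string, or None if it cannot be imported."""
    try:
        trt = importlib.import_module("tensorrt")
    except (ImportError, OSError):
        # ImportError: package missing; OSError: shared libraries not found.
        return None
    return getattr(trt, "__version__", "unknown")


def trtexec_location():
    """Return the full path of trtexec if it is on PATH, else None."""
    return shutil.which("trtexec")


print("tensorrt python package:", tensorrt_version())
print("trtexec on PATH:", trtexec_location())
```

If either line prints `None`, the corresponding install step (the pip install of the wheel, or the trtexec symlink) probably needs another look.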

(3) Generate the TensorRT engine file

Run the one-click engine build script:

    bash 4_build_trt_engine.sh

If everything goes well, yolo11n.engine is generated under wgts, with a log similar to the following:

    [10/02/2024-21:28:48] [V] === Explanations of the performance metrics ===
    [10/02/2024-21:28:48] [V] Total Host Walltime: the host walltime from when the first query (after warmups) is enqueued to when the last query is completed.
    [10/02/2024-21:28:48] [V] GPU Compute Time: the GPU latency to execute the kernels for a query.
    [10/02/2024-21:28:48] [V] Total GPU Compute Time: the summation of the GPU Compute Time of all the queries. If this is significantly shorter than Total Host Walltime, the GPU may be under-utilized because of host-side overheads or data transfers.
    [10/02/2024-21:28:48] [V] Throughput: the observed throughput computed by dividing the number of queries by the Total Host Walltime. If this is significantly lower than the reciprocal of GPU Compute Time, the GPU may be under-utilized because of host-side overheads or data transfers.
    [10/02/2024-21:28:48] [V] Enqueue Time: the host latency to enqueue a query. If this is longer than GPU Compute Time, the GPU may be under-utilized.
    [10/02/2024-21:28:48] [V] H2D Latency: the latency for host-to-device data transfers for input tensors of a single query.
    [10/02/2024-21:28:48] [V] D2H Latency: the latency for device-to-host data transfers for output tensors of a single query.
    [10/02/2024-21:28:48] [V] Latency: the summation of H2D Latency, GPU Compute Time, and D2H Latency. This is the latency to infer a single query.
    [10/02/2024-21:28:48] [I] &&&& PASSED TensorRT.trtexec [TensorRT v100500] [b18] # trtexec --onnx=../wgts/yolo11n_sim.onnx --saveEngine=../wgts/yolo11n.engine --fp16 --verbose
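The last log line shows the trtexec command that the build script ends up running. As a sketch, the same command can be assembled and checked from Python; the flags are taken from that log line, while the helper names `build_trtexec_cmd`, `trtexec_passed`, and `build_engine` are hypothetical and not part of the project.

```python
import subprocess


def build_trtexec_cmd(onnx_path, engine_path, fp16=True, verbose=True):
    """Assemble the trtexec command seen in the log above as an argument list."""
    cmd = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")
    if verbose:
        cmd.append("--verbose")
    return cmd


def trtexec_passed(log_text):
    """True if the log contains trtexec's PASSED marker."""
    return "&&&& PASSED TensorRT.trtexec" in log_text


def build_engine(onnx_path, engine_path):
    """Run trtexec (must be on PATH) and report whether the build passed."""
    result = subprocess.run(
        build_trtexec_cmd(onnx_path, engine_path),
        capture_output=True,
        text=True,
    )
    return result.returncode == 0 and trtexec_passed(result.stdout)
```

For example, `build_engine("../wgts/yolo11n_sim.onnx", "../wgts/yolo11n.engine")` would run the same build as in the log and return `True` only when the PASSED marker appears.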

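(4) Run TensorRT inference

Run the one-click inference script:

    bash 5_infer_trt.sh

The actual TensorRT inference script is deploy/infer_trt.py. On success, it prints the following log:

    ------ trt infer success! ------

The inference result is saved to deploy/output.jpg.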

Source: 极智视界
